I have another site that is fairly small and uses a responsive design template. I decided to convert that site to https with certbot, which is EFF’s client for Let’s Encrypt.

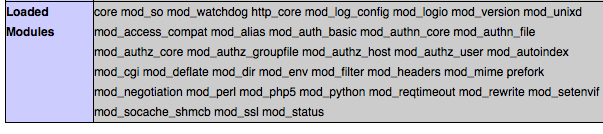

I had previously played around with a self-signed certificate, so I went to /etc/ssl and removed all of the keys for the site. Then I followed the directions on the certbot site and let it guide me through the process. When I removed the old certificates I noticed that the directories were owned by root and that I couldn’t cd into them without first changing to root. Thinking that certbot would put the new certificates in the same place (which it doesn’t), I decided to run the commands as root. So after downloading certbot-auto and changing its permissions, I changed to root by using ‘sudo su’.

The bot is really smart about looking at your system and figuring out which domains you are hosting and which already have certificates. It appears to look at the sites-available files, and if it finds a section for https (set off with <VirtualHost *:443> ... </VirtualHost>) it assumes that a certificate is already in place. So the domain I was targeting didn’t show up in the list. After I removed that section from the file and ran certbot again, it found the site.
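If you want to see ahead of time which vhost files already have an https section, you can search for port 443 blocks yourself. This is only a rough sketch: the function name `vhosts_with_https` is made up for illustration, the default directory assumes a Debian/Ubuntu Apache layout, and the pattern is a guess at what certbot keys on, not something taken from its documentation.

```shell
#!/bin/sh
# Hypothetical helper: list the files in a vhost directory that contain a
# <VirtualHost ...:443> block. The default path assumes Debian/Ubuntu.
vhosts_with_https() {
    dir="${1:-/etc/apache2/sites-available}"
    # -l: print only the names of matching files
    # -i: Apache directive names are case-insensitive
    # -E: extended regular expression
    grep -liE '<VirtualHost[[:space:]]+[^>]*:443>' "$dir"/* 2>/dev/null
}
```

Calling `vhosts_with_https` with no argument checks the default directory. Pass a different directory as the first argument if your vhost files live elsewhere.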

Certbot doesn’t use that section so after the installation it added these lines to the sites-available file.

RewriteEngine on

RewriteCond %{SERVER_NAME} =wellgolly.com [OR]

RewriteCond %{SERVER_NAME} =www.wellgolly.com

RewriteRule ^ https://%{SERVER_NAME}%{REQUEST_URI} [END,QSA,R=permanent]

I did not have to restart Apache. When I visited the site I noticed that it was not displaying properly. The cause was http links in the header for fonts and bootstrap. Changing all of the links to https fixed the display problems.
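A quick way to hunt for leftover http links like these is to grep the site files. The sketch below assumes the links appear as `src="http://..."` or `href="http://..."` attributes. The function name `find_http_links` is hypothetical, and the recursive `-r` option assumes GNU or BSD grep.

```shell
#!/bin/sh
# Hypothetical helper: recursively list src/href attributes that still point
# at plain http:// URLs. Output format is file:line-number:matching-line.
find_http_links() {
    root="${1:-.}"
    # -r: recurse into subdirectories; -n: show line numbers;
    # -i: match SRC/HREF in any case; -E: extended regular expression
    grep -rniE "(src|href)=[\"']http://" "$root"
}
```

Run it from the site’s document root, or pass the directory as the first argument. It only catches links written as attributes, so URLs inside CSS `url(...)` or built up in scripts still need a manual look.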

The next thing was to look for all of the internal links that needed to be changed to https. I used the link checker at W3.org to check for links that I missed. Finally I went to SSL Labs and tested the site. It got an A+.

Unlike my other certificates, which are stored in /etc/ssl, the ones certbot creates are kept under /etc/letsencrypt/live/.
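Each certificate gets its own subdirectory there, named after the certificate and holding cert.pem, chain.pem, fullchain.pem and privkey.pem. As a small sketch, you can check when a certificate expires with openssl. The function name `cert_dates` is made up for illustration and mydomain.com is a placeholder.

```shell
#!/bin/sh
# Hypothetical helper: print the validity period of a PEM certificate.
cert_dates() {
    # -noout: do not print the encoded certificate itself
    # -dates: print the notBefore= and notAfter= lines
    openssl x509 -in "$1" -noout -dates
}

# Example (reading under /etc/letsencrypt usually requires root):
#   sudo openssl x509 -in /etc/letsencrypt/live/mydomain.com/cert.pem -noout -dates
```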

Also, rather than adding the section with the https port (443) to your vhost file, it creates a separate one for https. It uses the same name and appends ‘-le-ssl.conf’.

My site is fairly straightforward, but you may need to add things to this file if you have a more complicated setup.

If you have more than one domain that points to the same site, they can share a certificate. For example, if www.myreallylongdomainname.com points to www.mydomain.com, then after you set up the certificate for one domain you can use the same certificate for the others. Unlike the original setup, this time you do need to restart Apache for the changes to take effect.

sudo ./certbot-auto certonly --cert-name mydomain.com -d mail.mydomain.com,www.mydomain.com,myreallylongdomainname.com,www.myreallylongdomainname.com

sudo service apache2 restart
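To confirm that the certificate really covers every name you asked for, you can read the Subject Alternative Name list out of the certificate file. This is a sketch, not part of certbot: the function name `cert_names` is hypothetical, and it assumes the usual `openssl x509 -text` layout, where the names appear on the line after the "Subject Alternative Name" heading.

```shell
#!/bin/sh
# Hypothetical helper: print one DNS name per line from a certificate's
# Subject Alternative Name extension.
cert_names() {
    openssl x509 -in "$1" -noout -text |
        grep -A1 'Subject Alternative Name' |  # heading plus the names line
        tail -n 1 |                            # keep only the names line
        tr -d ' ' |                            # drop indentation and spaces
        tr ',' '\n' |                          # one entry per line
        sed 's/^DNS://'                        # strip the DNS: prefix
}

# Example (reading under /etc/letsencrypt usually requires root):
#   cert_names /etc/letsencrypt/live/mydomain.com/cert.pem
```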

If you have lots of sites on the same server, you can see which ones have certificates by running the command:

sudo ./certbot-auto certificates

You will get an email when the certificate is about to expire. Change to the directory where certbot-auto is located and run

sudo ./certbot-auto renew

You can also automate the renewal check with a cron job. I set one up to run monthly. It looks like this:

0 0 1 * * /home/userid/certbot-auto renew -q